Guardians of the Galaxy Vol. 2: my work runs from 0.43 to 0.47 (debris and dust simulation).

Studio: Trixter.de

VFX Technical Director & Developer

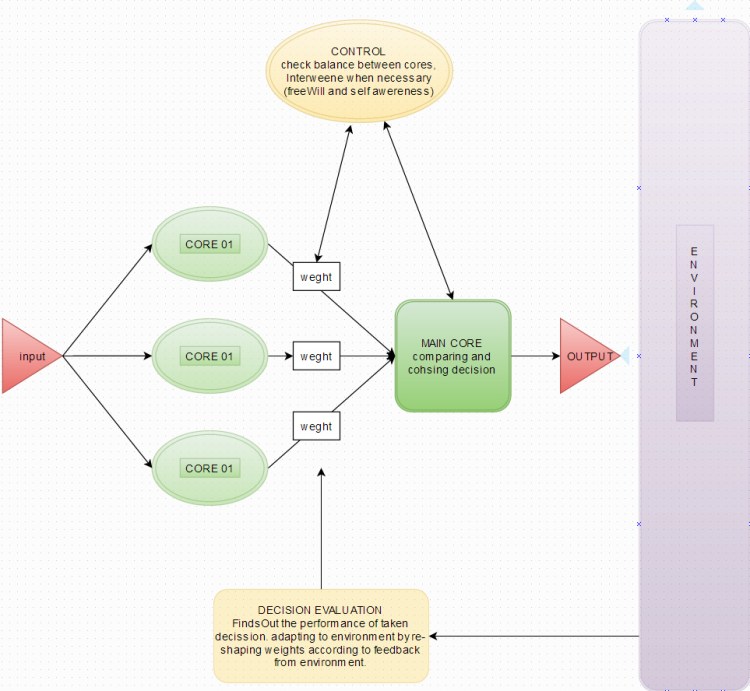

tradeRunner is a trading platform I have been developing since the beginning of the year. Initially, the idea was to build an order-automation tool for a friend; I then introduced an algorithm for finding repeated trade patterns. To test and improve the algorithm's performance, I started developing extra modules, which eventually grew into a fully functional trading platform.

Apart from “numPy” and “pandas”, all libraries were written from scratch for the platform. This was not the most efficient approach, as solid platforms already exist, but I decided to take the more experimental and fun route. Above is the simplified pipeline of the model.

main modules>

dataFlow> Communication between modules is achieved with the following data blocks and files:

performance output>

evolutionAlgorithm>

The platform uses an evolutionary algorithm (EA) to maximise its performance. The current version is a two-dimensional EA: it alters one input, checks the change in performance, and keeps the highest-performing parameters. However, because the core algorithms take multi-dimensional input, this approach is not well optimised. I am currently developing a new model, which I will cover in the next post.
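A minimal sketch of the one-input-at-a-time loop described above might look like this. It is not the platform's code: the `evaluate` callback (standing in for a backtest score), the candidate value lists and the function name are hypothetical.

```python
from typing import Callable, Dict, List


def one_at_a_time_search(
    params: Dict[str, float],
    candidates: Dict[str, List[float]],
    evaluate: Callable[[Dict[str, float]], float],
) -> Dict[str, float]:
    """Vary one input at a time and keep whichever value scores best.

    `evaluate` is a hypothetical stand-in for a backtest that returns a
    performance score (higher is better).
    """
    best = dict(params)
    best_score = evaluate(best)
    for name, values in candidates.items():
        for value in values:
            trial = dict(best)      # copy the current best, then change a single input
            trial[name] = value
            score = evaluate(trial)
            if score > best_score:  # keep the change only if performance improves
                best, best_score = trial, score
    return best


# Usage with a toy score whose optimum is x = 3, y = -1.
def toy_score(p: Dict[str, float]) -> float:
    return -(p["x"] - 3) ** 2 - (p["y"] + 1) ** 2


print(one_at_a_time_search({"x": 0.0, "y": 0.0},
                           {"x": [1.0, 2.0, 3.0, 4.0], "y": [-2.0, -1.0, 0.0, 1.0]},
                           toy_score))  # {'x': 3.0, 'y': -1.0}
```

Because each input is changed on its own, combinations that only pay off when two inputs move together are never tried, which is why this style of search struggles with multi-dimensional inputs.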

hardware>

On a PC there is no limit on the number of algorithms running simultaneously; however, the Raspberry Pi has limited CPU and memory capacity. Code optimization and additional fans improved the setup, but memory is still an issue, so I will run a second optimization pass to reduce memory usage.

FutureImprovements>

Recently I have been working on a platform game named “Octo”. Most of the coding is finished; here is the main character. Artstation Link

EvolutionBox is a Unity-based simulation where I explore evolution as the key mechanism for learning. The first steps focus on creating agents that interact with their environment and evolve over time. In future updates, I’ll dive deeper into pattern recognition and self-learning.

The simulation mimics three core principles of evolution:

Agent Goals:

Every agent starts with the same set of traits and two main objectives:

Each new generation carries mutated traits, and the agents best adapted to their environment are more likely to survive and pass on their genes.
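One classic way to make better-adapted agents more likely to pass on their genes is fitness-proportional ("roulette-wheel") selection. The sketch below assumes each agent has an explicit, non-negative fitness number; in EvolutionBox survival emerges from the environment instead, so the `fitness` values and the `select_parents` name are hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def select_parents(population: Sequence[T], fitness: Sequence[float],
                   k: int, rng: random.Random) -> List[T]:
    """Fitness-proportional (roulette-wheel) selection: fitter agents are picked more often.

    `fitness` is a hypothetical per-agent score; it must be non-negative.
    """
    if len(population) != len(fitness):
        raise ValueError("population and fitness must have the same length")
    if any(f < 0 for f in fitness):
        raise ValueError("fitness values must be non-negative")
    if sum(fitness) == 0:
        # No agent has any advantage: fall back to a uniform choice.
        return rng.choices(list(population), k=k)
    return rng.choices(list(population), weights=list(fitness), k=k)


# Usage: agent_c is picked most often on average, but the others still get a chance.
rng = random.Random(42)
print(select_parents(["agent_a", "agent_b", "agent_c"], [1.0, 1.0, 6.0], k=4, rng=rng))
```

Keeping weaker agents in the draw, rather than always taking the top scorers, preserves variety for mutation to work on.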

Agents inherit the following traits:

Agents decide what to do based on their priorities and current needs. They calculate the “weight” of each possible action and choose the one that feels most urgent. For example:

While agents’ decisions change depending on their situation, their core priorities are set at birth. Each new offspring inherits priorities from their parents, with a mutation factor that adds variability.
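A minimal sketch of the decision and inheritance rules above, under stated assumptions: an action's weight is taken to be the inherited priority multiplied by the current need, and a child's priorities are the parents' average plus Gaussian noise. Both formulas, the action names and the function names are hypothetical, not the simulation's actual code.

```python
import random
from typing import Dict


def choose_action(priorities: Dict[str, float], needs: Dict[str, float]) -> str:
    """Pick the action whose weight (inherited priority x current need) is largest."""
    weights = {action: priorities[action] * needs.get(action, 0.0) for action in priorities}
    return max(weights, key=weights.get)


def inherit_priorities(mother: Dict[str, float], father: Dict[str, float],
                       mutation: float, rng: random.Random) -> Dict[str, float]:
    """Child priorities: parents' average plus Gaussian mutation, clamped at zero."""
    child = {}
    for action in mother:
        base = 0.5 * (mother[action] + father[action])
        child[action] = max(0.0, base + rng.gauss(0.0, mutation))
    return child


# Usage: the same inherited priorities lead to different choices as needs change.
priorities = {"eat": 1.0, "mate": 2.0}
print(choose_action(priorities, {"eat": 0.9, "mate": 0.2}))  # eat
print(choose_action(priorities, {"eat": 0.1, "mate": 0.8}))  # mate
print(inherit_priorities(priorities, {"eat": 1.5, "mate": 1.0}, 0.1, random.Random(7)))
```

Priorities stay fixed for an agent's whole life, while needs change every step; that split mirrors the difference between traits set at birth and situational decisions.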

Over time, this process creates agents better adapted to their environment.

This simulation demonstrates how simple rules of evolution can create complex behaviors.

————————————————————————–

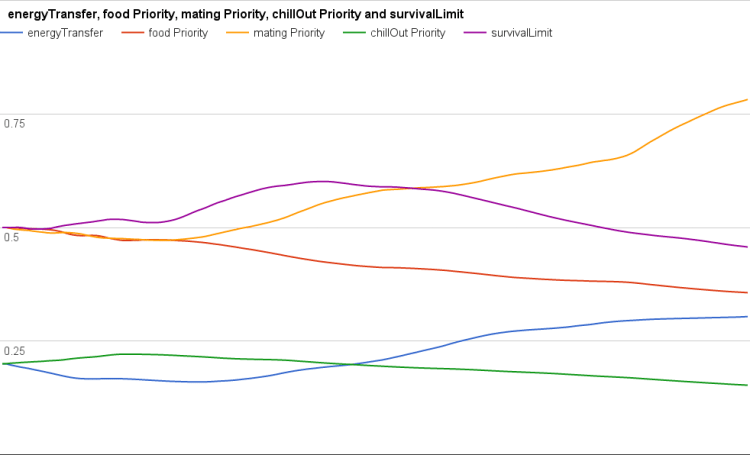

Results:

After 200,000 cycles of evolution under default parameters, here’s what the data shows:

Currently, agents have basic pre-programmed knowledge about categories like “self,” “other agents,” and “food.” For example, if an object is labeled as “food,” the agent inherently knows it can eat it.

In the next version, I aim to integrate a neural network system for each agent. This system will allow agents to:

Over time, agents will draw conclusions from these patterns, improving their survival skills. Some of this learned knowledge may even be passed down genetically, resulting in smarter agents overall.

The current version focuses on instinctive behaviors like searching for food and mating. Future updates will introduce:

Some nice references:

http://www.vice.com/read/sorry-religions-human-consciousness-is-just-a-consequence-of-evolution

http://faculty.philosophy.umd.edu/pcarruthers/Evolution-of-consciousness.htm

http://spectrum.ieee.org/automaton/robotics/robotics-software/bizarre-soft-robots-evolve-to-run

http://www.huffingtonpost.com/the-conversation-us/evolving-our-way-to-artif_b_9183434.html

Oasis commercial project. The project was done at Mecanique General; I was responsible for character rigging.

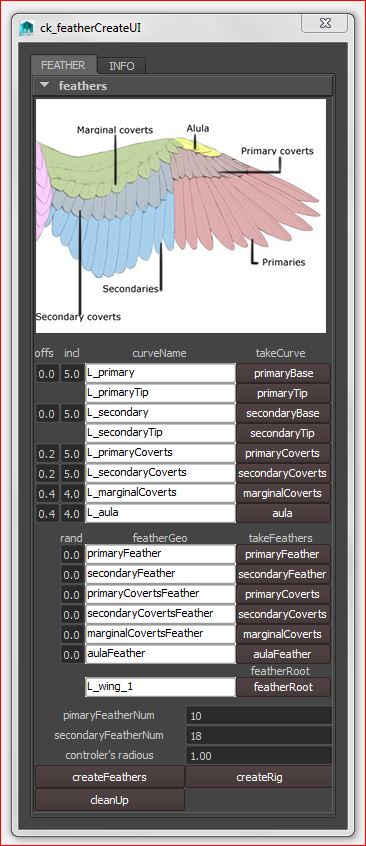

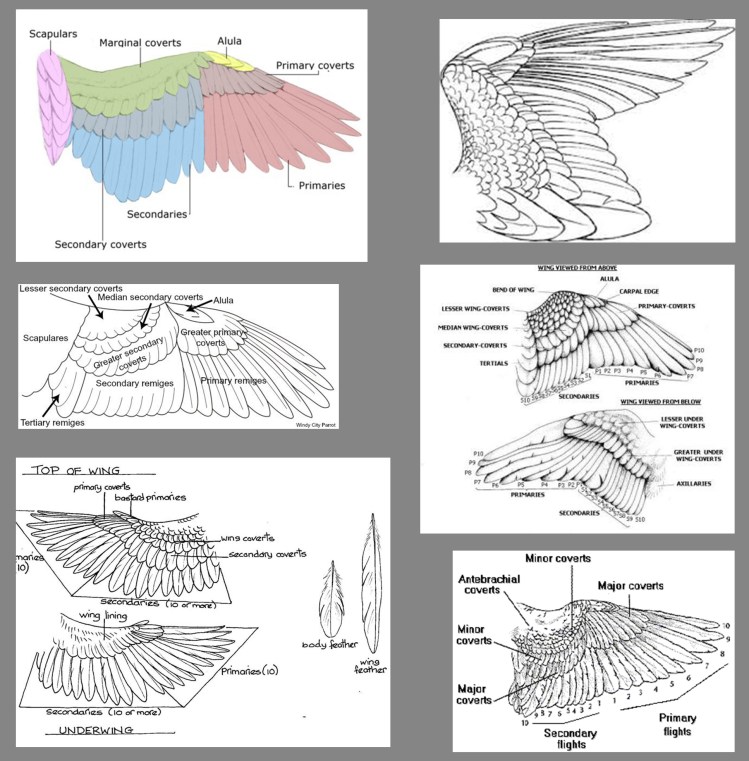

After a couple of bird rigging projects, I prepared a wing and feather automation tool. Most birds seem to share the same pattern of feather segments, varying only in the number of feathers in each segment.
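Because the segment pattern repeats and only the feather count changes, a wing layout can be described as data. The Maya-independent sketch below assumes each segment is a normalised span along the wing (0 to 1) with its own feather count; the `FeatherSegment` class, `feather_positions` function and segment names are hypothetical and not part of ck_featherCreate.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FeatherSegment:
    name: str     # hypothetical segment label
    start: float  # normalised position along the wing where the segment begins (0-1)
    end: float    # normalised position where the segment ends (0-1)
    count: int    # number of feathers in this segment; this is what varies per bird


def feather_positions(segments: List[FeatherSegment]) -> List[Tuple[str, int, float]]:
    """Return (segment name, feather index, position along the wing) for every feather."""
    layout = []
    for seg in segments:
        for i in range(seg.count):
            # Spread feathers evenly across the segment; a single feather sits at its midpoint.
            t = 0.5 if seg.count == 1 else i / (seg.count - 1)
            layout.append((seg.name, i, seg.start + t * (seg.end - seg.start)))
    return layout


# Usage: the same two-segment pattern, only with different feather counts.
wing = [FeatherSegment("segment_a", 0.0, 0.5, 3), FeatherSegment("segment_b", 0.5, 1.0, 1)]
for feather in feather_positions(wing):
    print(feather)
```

In a rigging tool, each position would then drive where a feather joint or control is placed; changing a bird only means changing the `count` values.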

Intro ck_featherCreate_1.1:

ck_featherCreate is a Maya rigging tool for wing and feather automation.

How to install:

Working with Base scene:

How the script works (under the hood):

Limitations & future Improvements>

Version History

References:

In facial rigging, corrective blend shapes can become a very tedious and time-consuming process. Sometimes it takes more effort to arrange and connect blend shapes than to actually sculpt them, so I wrote a script to standardize the process and make it easier.

Limitations: In the current script, the target geometry must not have any outputs; it still works if you disconnect the outputs before creating or editing shapes. Being able to create correctives for more than two target shapes would also be better. Finally, correctives for mid-range weights will be added in a future version.
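One common way to drive a corrective shape is to derive its weight from the weights of the target shapes it fixes, either by multiplying them or by taking the lowest one. The sketch below assumes that approach; it is not necessarily what the script does, and `corrective_weight` and its modes are hypothetical. Accepting a list of weights instead of exactly two shows how the "more than two target shapes" improvement could work.

```python
import math
from typing import Sequence


def corrective_weight(driver_weights: Sequence[float], mode: str = "multiply") -> float:
    """Weight for a corrective shape driven by two or more target shapes."""
    if len(driver_weights) < 2:
        raise ValueError("a corrective needs at least two driver targets")
    # Clamp each driver to the usual 0-1 blend shape range.
    clamped = [min(1.0, max(0.0, w)) for w in driver_weights]
    if mode == "multiply":
        return math.prod(clamped)   # fades in smoothly as both targets approach 1
    if mode == "lowest":
        return min(clamped)         # follows whichever target is least active
    raise ValueError(f"unknown mode: {mode}")


# Usage: full correction only when both targets are fully on.
print(corrective_weight([1.0, 1.0]))            # 1.0
print(corrective_weight([0.5, 0.5]))            # 0.25
print(corrective_weight([0.5, 0.5], "lowest"))  # 0.5
```

With multiplication, both targets at 0.5 give only a quarter of the corrective, which is why separate correctives at mid weights can still be needed.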

Demo: from 4.25 to 5.20

How it works

1-Select the base geometry with one blendShape node

2-Update the UI

3-Select the first bsSource

4-Select the second bsSource

5-Press the create/edit button for pose correction

6-Press done

Lately I had a project about growing plants in a VR environment. With any “L system based software” this is a fairly easy task; however, the platform was Unity, and Unity can’t import a changing vertex count, so all animation had to be joint- or blendShape-based. So I updated one of my old scripts, secondaryAnim.

The script takes the first selected object’s animation and transfers it to the rest of the hierarchy with delay, conserve and damping inputs: a kind of fake dynamics. It is a simple script but very helpful, and it can also be used as an animation randomization tool.
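A minimal sketch of the idea, working on plain per-frame values rather than Maya nodes. How delay, conserve and damping are interpreted here (a frame offset per hierarchy level, an amplitude multiplier per level, and exponential smoothing) is an assumption, and `propagate_animation` is a hypothetical name, not secondaryAnim's code.

```python
from typing import List


def propagate_animation(source: List[float], depth: int, delay: int = 2,
                        conserve: float = 0.8, damping: float = 0.5) -> List[List[float]]:
    """Return one curve per hierarchy level; level 0 is the source animation.

    Assumed meanings (hypothetical, not taken from secondaryAnim):
      delay    - frames each level lags behind its parent
      conserve - fraction of the parent's motion kept at each level
      damping  - 0 = follow the target exactly, closer to 1 = smoother, lazier motion
    """
    if not 0.0 <= damping < 1.0:
        raise ValueError("damping must be in [0, 1)")
    curves = [list(source)]
    for _ in range(depth):
        parent = curves[-1]
        child: List[float] = []
        value = 0.0
        for frame in range(len(parent)):
            # Time offset: hold the parent's first value until the delay has passed.
            lagged = parent[frame - delay] if frame >= delay else parent[0]
            target = lagged * conserve
            # Exponential smoothing toward the target gives the "fake dynamics" lag.
            value = target if frame == 0 else value + (1.0 - damping) * (target - value)
            child.append(value)
        curves.append(child)
    return curves


# Usage: a swinging root rotation rippling down two child levels.
for level, curve in enumerate(propagate_animation([0, 10, 0, -10, 0, 10], depth=2)):
    print(level, [round(v, 2) for v in curve])
```

Each level is computed from the one above it, so the delay and loss accumulate down the chain, which is what makes tips of a hierarchy trail behind the root.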

script Link: